
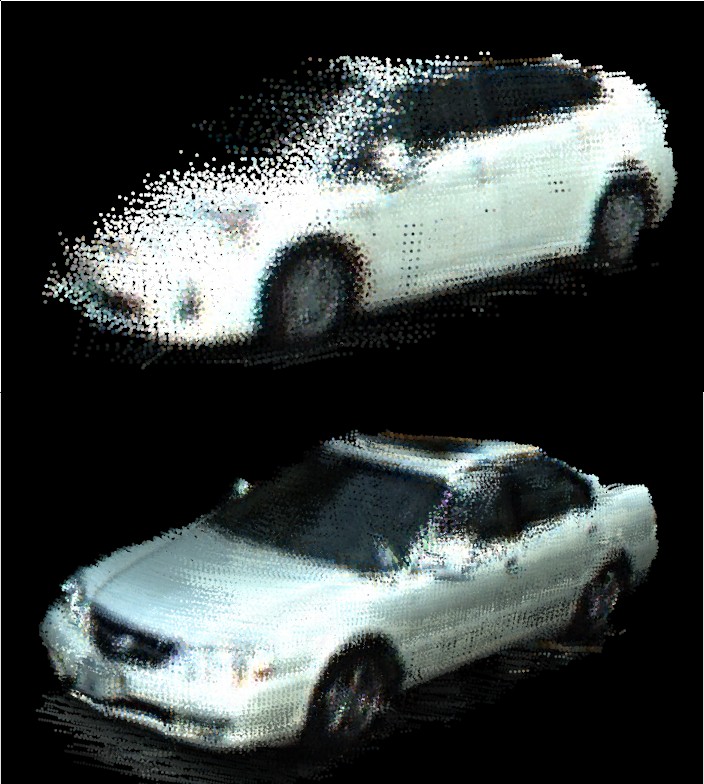

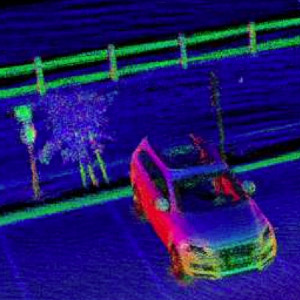

Combining 3D Shape, Color, and Motion for Robust Anytime Tracking

David Held, Jesse Levinson, Sebastian Thrun, and Silvio Savarese.

Robotics: Science and Systems (RSS), 2014.

Improving on our ICRA 2013 paper, this new approach enables real-time probabilistic object tracking. Computational time is now allocated dynamically according to the shape of the track’s posterior distribution. The algorithm is “anytime”, allowing speed or accuracy to be optimized based on the needs of the application. We currently use this method to track all dynamic obstacles seen by our autonomous vehicle in real time, with significantly improved accuracy compared to our previous Kalman-filter-based approach.

pdf (rss),

Project Page - Supplementary material, C++ code, poster, presentation,

bib
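
To make the “anytime” idea concrete, here is a minimal 1D sketch under stated assumptions: a hypothetical `likelihood(x)` scores candidate object positions, the posterior is first evaluated on a coarse grid, and each additional pair of evaluations splits whichever cell currently holds the most probability mass. The function names, the cell-splitting rule, and the Gaussian toy likelihood are illustrative choices, not the algorithm from the paper.

```python
import math


def anytime_posterior_mean(likelihood, lo, hi, n_coarse, budget, min_width=1e-3):
    """Estimate the mean of a 1D posterior on [lo, hi) using at most `budget`
    likelihood evaluations (hypothetical helper for illustration).

    Cells are (left, width, mass) tuples. After a coarse pass, the cell holding
    the most mass is split in two, so extra computation goes where the
    posterior is concentrated. Stopping early still yields a coarser estimate.
    """
    width = (hi - lo) / n_coarse
    cells = []
    for i in range(n_coarse):
        left = lo + i * width
        # Unnormalized mass: likelihood at the cell center times the cell width.
        cells.append((left, width, likelihood(left + width / 2) * width))
    evals = n_coarse
    while evals + 2 <= budget:
        k = max(range(len(cells)), key=lambda j: cells[j][2])
        left, w, _ = cells[k]
        half = w / 2
        if half < min_width:
            break  # the most probable cell is already at the finest resolution
        cells[k:k + 1] = [
            (left + j * half, half, likelihood(left + j * half + half / 2) * half)
            for j in range(2)
        ]
        evals += 2
    total = sum(c_mass for _, _, c_mass in cells)
    mean = sum((c_left + c_w / 2) * c_mass for c_left, c_w, c_mass in cells) / total
    return mean, len(cells), evals


def gaussian(x, mu=2.3, sigma=0.2):
    """Toy unnormalized likelihood peaked at x = 2.3."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2)


for budget in (10, 50, 200):
    print(budget, anytime_posterior_mean(gaussian, 0.0, 10.0, 10, budget))
```

A larger `budget` places more cells around the peak and typically tightens the estimate; stopping early still returns a usable, coarser answer.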


Group Induction

Alex Teichman and Sebastian Thrun.

Proc. of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2013.

Tracking-based semi-supervised learning, as originally presented at RSS 2011, was an offline algorithm. This is fine in some contexts, but ideally a user could provide new hand-labeled training examples online, as the system runs, without retraining from scratch. Qualitatively, this would mean the ability to point out, from the back seat of your autonomous car, a few examples of, say, an elliptical bike or a sk8poler, and the algorithm would start learning to recognize them on the fly without you having to do anything else. Group induction is a mathematical framework for this kind of learning.

pdf,

bib


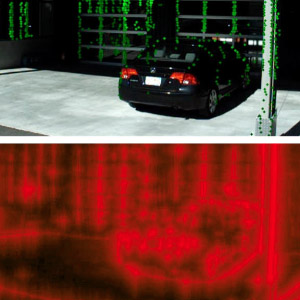

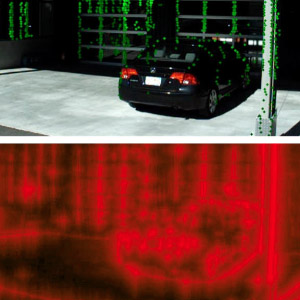

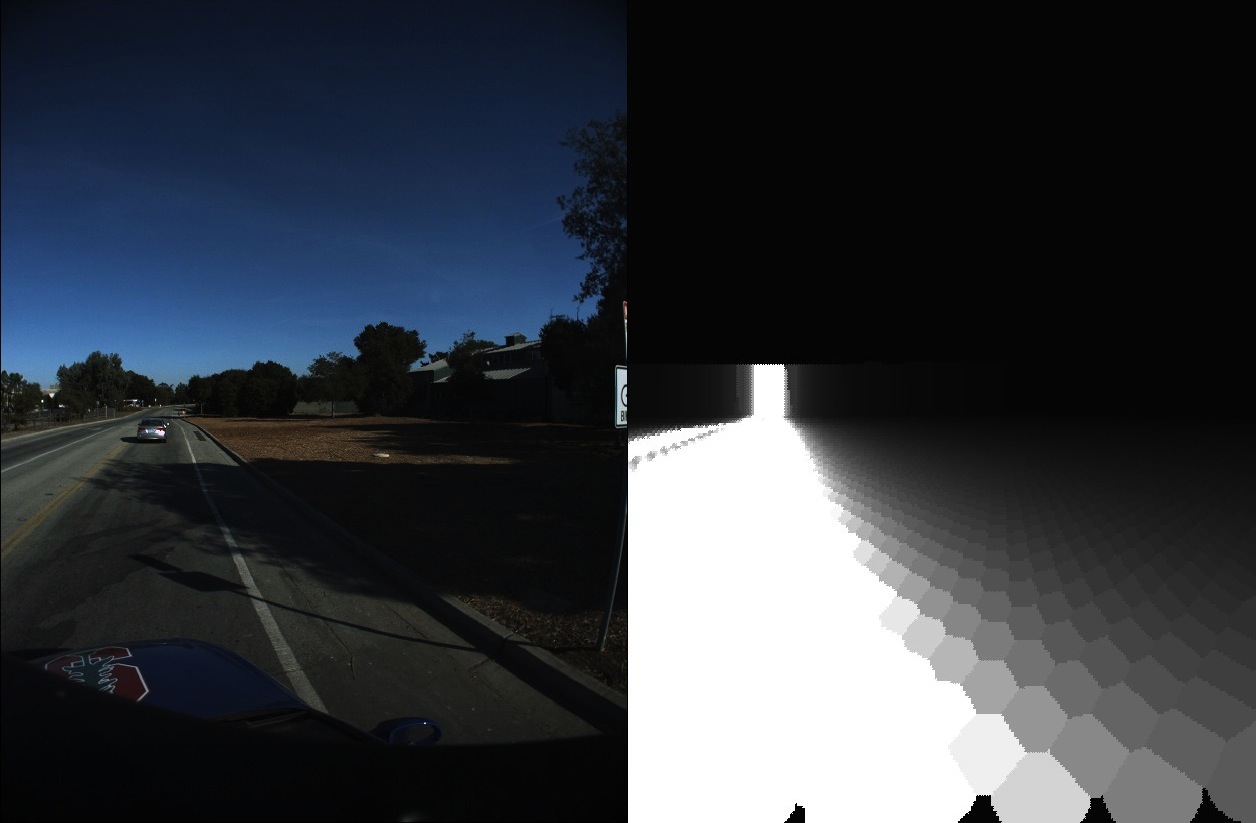

Automatic Online Calibration of Cameras and Lasers

Jesse Levinson and Sebastian Thrun.

Robotics: Science and Systems (RSS), 2013.

Extending previous work on offline 6-DOF calibration of 3D laser sensors to 2D cameras, this paper presents two new real-time techniques that enable camera-laser calibration online, automatically, and in arbitrary environments. The first is a probabilistic monitoring algorithm that can detect a sudden miscalibration in a fraction of a second. The second is a continuous calibration optimizer that adjusts transform offsets in real time, tracking gradual sensor drift as it occurs. Together, these techniques allow significantly greater flexibility and adaptability of robots in unknown and potentially harsh environments.

pdf,

bib
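
The two online techniques can be illustrated with a toy model. The sketch below assumes the calibration is reduced to an integer pixel offset `(dx, dy)` (a stand-in for the full 6-DOF transform) and that laser depth-edge points and an image edge map are already available. The monitoring heuristic, which trusts a calibration when it scores better than most of its one-pixel perturbations, and all function names are hypothetical, not the paper's exact formulation.

```python
# Toy model: the camera-laser calibration is reduced to an integer pixel
# offset (dx, dy). All names here are hypothetical.
NEIGHBORS = [(i, j) for i in (-1, 0, 1) for j in (-1, 0, 1) if (i, j) != (0, 0)]


def alignment_score(edges, points, dx, dy):
    """Sum of image edge strength at laser depth-edge points shifted by (dx, dy)."""
    h, w = len(edges), len(edges[0])
    total = 0.0
    for x, y in points:
        u, v = x + dx, y + dy
        if 0 <= u < w and 0 <= v < h:
            total += edges[v][u]
    return total


def calibration_confidence(edges, points, dx, dy):
    """Fraction of one-pixel perturbations that score strictly worse than (dx, dy).

    Near 1.0: the current calibration sits at a sharp local optimum.
    Near 0.0: it is no better than its neighbors, a sign of miscalibration.
    """
    current = alignment_score(edges, points, dx, dy)
    worse = sum(alignment_score(edges, points, dx + i, dy + j) < current for i, j in NEIGHBORS)
    return worse / len(NEIGHBORS)


def track_step(edges, points, dx, dy):
    """One continuous-calibration update: move to the best-scoring neighbor, or stay."""
    candidates = [(0, 0)] + NEIGHBORS
    best_i, best_j = max(candidates, key=lambda d: alignment_score(edges, points, dx + d[0], dy + d[1]))
    return dx + best_i, dy + best_j


# Synthetic scene: image edges along column 10 and row 8; laser edge points
# lie exactly on them when the calibration offset is (0, 0).
edges = [[1.0 if (u == 10 or v == 8) else 0.0 for u in range(20)] for v in range(20)]
points = [(10, y) for y in range(2, 18)] + [(x, 8) for x in range(2, 18)]

print(calibration_confidence(edges, points, 0, 0))   # well calibrated
print(calibration_confidence(edges, points, 3, -2))  # badly shifted
print(track_step(edges, points, 1, 0))               # small drift is corrected
```

In this toy scene the correct offset beats all eight neighbors (confidence 1.0), a badly shifted offset is no better than its neighbors (confidence 0.0), and a one-pixel drift to `(1, 0)` is pulled back to `(0, 0)` by a single `track_step`.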


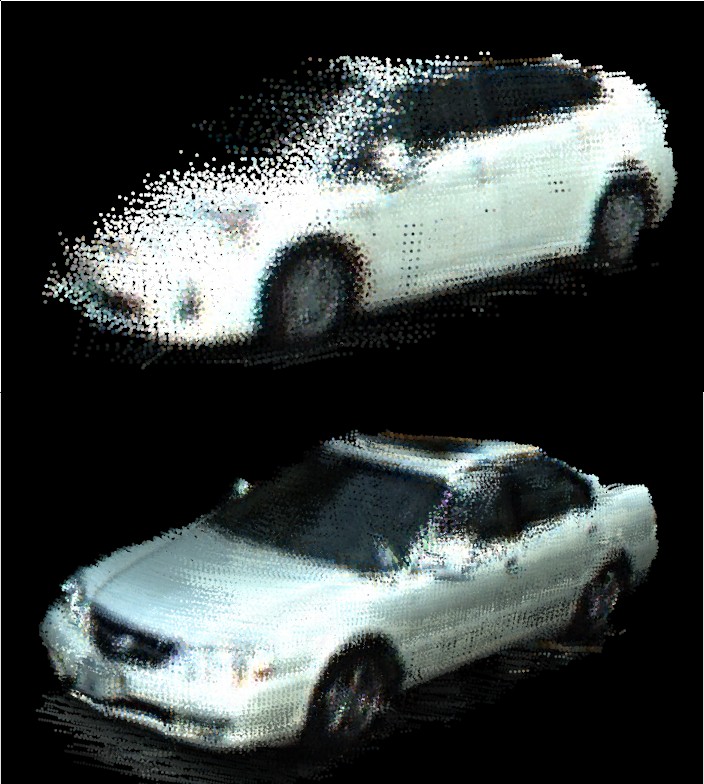

Precision Tracking with Sparse 3D and Dense Color 2D Data

David Held, Jesse Levinson, and Sebastian Thrun.

International Conference on Robotics and Automation (ICRA), 2013.

Precision tracking is important for predicting the behavior of other cars in autonomous driving. We present a novel method that combines sparse laser data with a high-resolution camera image to achieve accurate velocity estimates of moving objects. A color-augmented, pre-filtered grid search algorithm aligns the points from a tracked object, yielding much more precise estimates of the tracked vehicle’s velocity than were possible with previous methods.

pdf,

bib
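
A simplified sketch of color-augmented grid-search alignment, assuming 2D points that each carry one scalar color value, a pure-translation motion model between two frames, and brute-force nearest-neighbor matching. The `color_weight` parameter, the cost function, and the function names are hypothetical; the paper's pre-filtering step is not reproduced.

```python
def alignment_cost(prev_pts, curr_pts, dx, dy, color_weight=0.5):
    """Cost of shifting previous-frame points by (dx, dy): for each shifted point,
    the best match in the current frame by squared distance plus weighted
    squared color difference. Points are (x, y, color) tuples."""
    cost = 0.0
    for x, y, c in prev_pts:
        sx, sy = x + dx, y + dy
        cost += min((sx - u) ** 2 + (sy - v) ** 2 + color_weight * (c - k) ** 2
                    for u, v, k in curr_pts)
    return cost


def grid_search_velocity(prev_pts, curr_pts, dt, radius=2.0, step=0.25, color_weight=0.5):
    """Exhaustively search translations on a grid; convert the best one to a velocity."""
    n = int(round(radius / step))
    best = None
    for i in range(-n, n + 1):
        for j in range(-n, n + 1):
            dx, dy = i * step, j * step
            cost = alignment_cost(prev_pts, curr_pts, dx, dy, color_weight)
            if best is None or cost < best[0]:
                best = (cost, dx, dy)
    _, dx, dy = best
    return dx / dt, dy / dt


# Sparse toy object with distinct colors, moved by (1.0, 0.25) over dt = 0.1 s.
prev = [(0.0, 0.0, 0.1), (1.0, 0.0, 0.9), (2.0, 0.5, 0.5), (0.5, 1.5, 0.3)]
curr = [(x + 1.0, y + 0.25, c) for x, y, c in prev]
print(grid_search_velocity(prev, curr, dt=0.1))
```

Because every point in the toy example has a distinct color, only the true shift gives zero cost; on sparse laser data, a color term of this kind can help separate alignments that geometry alone scores similarly.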


Automatic Calibration of Cameras and Lasers in Arbitrary Scenes

Jesse Levinson and Sebastian Thrun.

International Symposium on Experimental Robotics (ISER), 2012.

This paper presents a new algorithm for automatically calibrating cameras to multi-beam lasers on a mobile robot, given a series of frames from both sensors. Our method does not require a known calibration target, nor does it require any hand labeling of correspondences. Even without these requirements, by leveraging unsupervised data, it still outperforms previous state-of-the-art calibration techniques by a significant margin.

bib
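
The calibration objective can be sketched in 1D, assuming one scan line per frame, a known pixel column for each laser return, and an image edge-strength row for the same frame. Laser returns at depth discontinuities are weighted more heavily, and the candidate offset that best lines those returns up with image edges wins. The offset-only model, the `gamma` exponent, and all names are assumptions for illustration; the actual method searches over a full 6-DOF transform.

```python
def discontinuities(ranges, gamma=0.5):
    """Depth-discontinuity weight per laser return: large where a return is
    closer than one of its neighbors (a foreground edge), zero elsewhere."""
    out = []
    for i, r in enumerate(ranges):
        left = ranges[i - 1] - r if i > 0 else 0.0
        right = ranges[i + 1] - r if i + 1 < len(ranges) else 0.0
        out.append(max(left, right, 0.0) ** gamma)
    return out


def objective(frames, offset):
    """Sum over frames of discontinuity weight times image edge strength at the
    pixel each laser return projects to, shifted by a candidate `offset`."""
    total = 0.0
    for ranges, pixels, edge_row in frames:
        for weight, u in zip(discontinuities(ranges), pixels):
            p = u + offset
            if 0 <= p < len(edge_row):
                total += weight * edge_row[p]
    return total


def calibrate(frames, search=range(-5, 6)):
    """Grid search for the offset that maximizes the objective."""
    return max(search, key=lambda off: objective(frames, off))


# One synthetic frame: a near object at laser indices 3..5, laser return i lands
# on pixel 2*i + 1, and the true image edges sit 2 pixels further right.
ranges = [10.0, 10.0, 10.0, 4.0, 4.0, 4.0, 10.0, 10.0]
pixels = [2 * i + 1 for i in range(8)]
edge_row = [1.0 if u in (9, 13) else 0.0 for u in range(20)]
frames = [(ranges, pixels, edge_row)]
print(calibrate(frames))
```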


Online, semi-supervised learning for long-term interaction with object recognition systems

Alex Teichman and Sebastian Thrun.

Invited talk at RSS Workshop on Long-term Operation of Autonomous Robotic Systems in Changing Environments, 2012.

Tracking-based semi-supervised learning, as originally presented at RSS 2011, was an offline algorithm. This is fine in some contexts, but ideally a user could provide new hand-labeled training examples online, as the system runs, without retraining from scratch. Qualitatively, this would mean the ability to point out, from the back seat of your autonomous car, a few examples of, say, an elliptical bike or a sk8poler, and tracking-based semi-supervised learning would start learning to recognize them on the fly without you having to do anything else. This talk discusses some preliminary work in this direction.

presentation


Tracking-based semi-supervised learning

Alex Teichman and Sebastian Thrun.

International Journal of Robotics Research (IJRR), 2012.

Extended journal version of our RSS 2011 paper of the same title, with more experiments and more intuition about how the method works.

pdf (sage),

bib


A Probabilistic Framework for Object Detection in Images using Context and Scale

David Held, Jesse Levinson, and Sebastian Thrun.

International Conference on Robotics and Automation (ICRA), 2012.

Detecting cars in real-world images is an important task for autonomous driving, yet it remains unsolved. The system described in this paper takes advantage of context and scale to build a monocular, single-frame, image-based car detector that significantly outperforms previous state-of-the-art methods. By using a calibrated camera and localization on a road map, we are able to obtain context and scale information from a single image without the use of a 3D laser.

pdf,

bib
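
The scale cue can be illustrated with basic projective geometry, assuming a level pinhole camera at a known height above flat ground: the image row where a car touches the road fixes its distance, and therefore its expected pixel height. The 1.5 m car height, the 20% relative spread, and the function names are hypothetical values chosen for the sketch, which shows only the scale term and not the paper's detector or its map-based context.

```python
import math


def expected_car_height_px(v_bottom, v_horizon, cam_height_m, car_height_m=1.5):
    """Expected image height (pixels) of a car whose bottom edge is at row v_bottom.

    Flat ground and a level pinhole camera: distance Z = f * h / (v_bottom - v_horizon),
    projected height = f * H / Z, so the focal length f cancels out.
    """
    dv = v_bottom - v_horizon
    if dv <= 0:
        return None  # at or above the horizon: no ground contact point
    return car_height_m * dv / cam_height_m


def scale_log_likelihood(box_height_px, v_bottom, v_horizon, cam_height_m, rel_sigma=0.2):
    """Gaussian log-likelihood that a candidate box has a plausible car size
    (hypothetical scoring rule for illustration)."""
    expected = expected_car_height_px(v_bottom, v_horizon, cam_height_m)
    if expected is None:
        return -math.inf
    sigma = rel_sigma * expected
    return -0.5 * ((box_height_px - expected) / sigma) ** 2 - math.log(sigma * math.sqrt(2 * math.pi))


# Camera 1.5 m above the road, horizon at row 300: a car touching row 400
# should appear about 100 px tall, so a 40 px box there is implausible.
print(expected_car_height_px(400, 300, 1.5))
print(scale_log_likelihood(100, 400, 300, 1.5), scale_log_likelihood(40, 400, 300, 1.5))
```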


Practical object recognition in autonomous driving and beyond

Alex Teichman and Sebastian Thrun.

IEEE Workshop on Advanced Robotics and its Social Impacts (ARSO), 2011.

This paper gives an overview of recent object recognition research in our lab and of what is needed to make it a fully functional, high-accuracy object recognition system that is applicable beyond perception for autonomous driving.

pdf,

bib


Tracking-based semi-supervised learning

Alex Teichman and Sebastian Thrun.

Robotics: Science and Systems (RSS), 2011.

Building on previous work, we introduce a simple semi-supervised learning method that uses tracking information to find new, useful training examples automatically. This method achieves nearly the same accuracy as our previous, fully supervised approach, but with about two orders of magnitude less human labeling effort.

pdf,

bib,

project,

RSS proceedings
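
The central step can be sketched as follows, assuming every track already carries per-frame log-odds from the current classifier. A track whose average log-odds is confidently positive or negative has all of its frames added to the training set with that label, including frames the classifier would have gotten wrong on its own. The averaging rule, the `threshold` value, and the names are illustrative assumptions; a self-training loop would alternate this step with retraining.

```python
def induce_from_tracks(tracks, threshold=2.0):
    """Select confidently classified tracks and label all of their frames.

    `tracks` maps a track id to a list of (frame_features, log_odds) pairs,
    where log_odds is the current classifier's score for the positive class.
    Returns a list of (frame_features, label) pairs with label in {+1, -1}.
    """
    induced = []
    for frames in tracks.values():
        mean_log_odds = sum(lo for _, lo in frames) / len(frames)
        if abs(mean_log_odds) >= threshold:
            label = 1 if mean_log_odds > 0 else -1
            # Every frame inherits the track label, even frames that were
            # individually misclassified: these are the informative new examples.
            induced.extend((features, label) for features, _ in frames)
    return induced


# Placeholder strings stand in for real per-frame feature vectors.
tracks = {
    "a": [("f1", 4.0), ("f2", 3.0), ("f3", -1.0)],  # confident positive track
    "b": [("g1", 0.5), ("g2", -0.4)],               # ambiguous: skipped
    "c": [("h1", -4.0), ("h2", -2.0)],              # confident negative track
}
print(induce_from_tracks(tracks))
```

Frame `f3` is scored negative on its own but is added as a positive example because its track is confidently positive.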


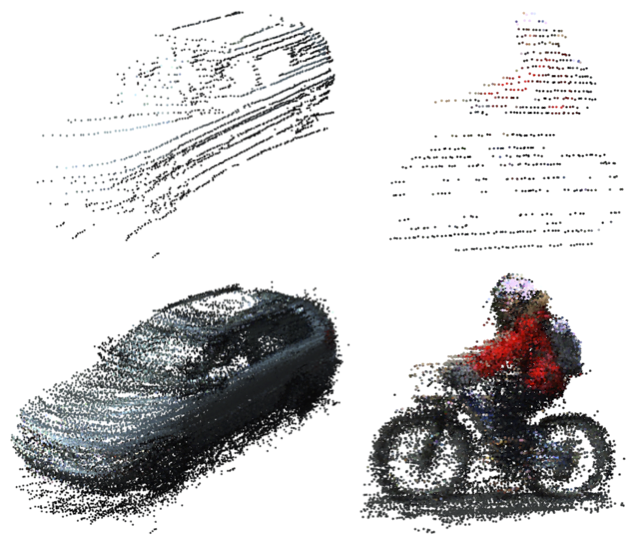

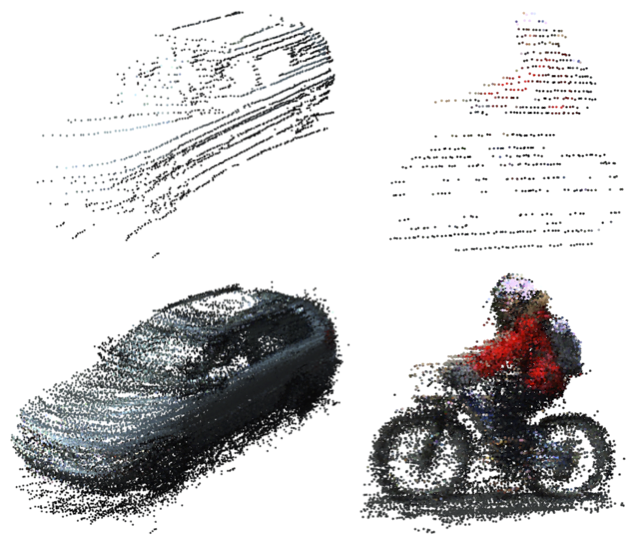

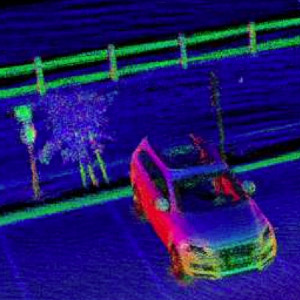

Towards 3D object recognition via classification of arbitrary object tracks

Alex Teichman, Jesse Levinson, and Sebastian Thrun.

International Conference on Robotics and Automation (ICRA), 2011.

Breaking down the object recognition problem into segmentation, tracking, and track classification components, we show an accurate, real-time method of classifying tracked objects as car, pedestrian, bicyclist, or 'other'.

pdf,

bib,

dataset
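
One common way to turn per-frame predictions into a track label is a naive Bayes combination, assuming frames are conditionally independent given the class. The sketch below uses the four classes named above; it is a generic illustration, not the classifier from the paper, and the frame probabilities in the example are invented.

```python
import math

CLASSES = ("car", "pedestrian", "bicyclist", "other")


def classify_track(frame_probs, prior):
    """Combine per-frame class posteriors into a track-level posterior.

    Naive Bayes with per-frame posteriors P(c | z_i):
        P(c | z_1..z_n) is proportional to P(c)^(1 - n) * prod_i P(c | z_i).
    """
    n = len(frame_probs)
    log_post = {c: (1 - n) * math.log(prior[c]) for c in CLASSES}
    for probs in frame_probs:
        for c in CLASSES:
            log_post[c] += math.log(probs[c])
    # Normalize in log space for numerical stability on long tracks.
    m = max(log_post.values())
    z = sum(math.exp(v - m) for v in log_post.values())
    return {c: math.exp(v - m) / z for c, v in log_post.items()}


prior = {c: 0.25 for c in CLASSES}
frame_a = {"car": 0.5, "pedestrian": 0.2, "bicyclist": 0.2, "other": 0.1}
frame_b = {"car": 0.3, "pedestrian": 0.4, "bicyclist": 0.2, "other": 0.1}
post = classify_track([frame_a, frame_a, frame_b], prior)
print(max(post, key=post.get), post)
```

Even though one frame on its own favors "pedestrian", the accumulated evidence over the track favors "car".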


Towards fully autonomous driving: systems and algorithms

Jesse Levinson, Jake Askeland, Jan Becker, Jennifer Dolson, David Held, Soeren Kammel, J. Zico Kolter, Dirk Langer, Oliver Pink, Vaughan Pratt, Michael Sokolsky, Ganymed Stanek, David Stavens, Alex Teichman, Moritz Werling, and Sebastian Thrun.

Intelligent Vehicles Symposium, 2011.

This paper is a broad summary of recent work on Junior, Stanford's autonomous vehicle. Topics covered include object recognition, sensor calibration, planning, control, etc.

pdf,

bib


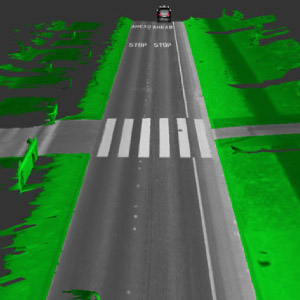

Traffic Light Mapping, Localization, and State Detection for Autonomous Vehicles

Jesse Levinson, Jake Askeland, Jennifer Dolson, and Sebastian Thrun.

International Conference on Robotics and Automation (ICRA), 2011.

We present a passive camera-based pipeline for traffic light state detection that uses imperfect vehicle localization and assumes prior knowledge of traffic light locations. To achieve robust real-time detections in a variety of lighting conditions, we combine several probabilistic stages that explicitly account for the corresponding sources of sensor and data uncertainty.

pdf,

bib
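
One probabilistic stage of such a pipeline, temporal filtering of the light state, can be sketched as a discrete Bayes filter. This assumes a detector that outputs a likelihood for each state in every frame; the transition probabilities below are hypothetical, and the localization and map-prior stages are not shown.

```python
STATES = ("red", "yellow", "green")

# Hypothetical per-frame transition probabilities (row: from, column: to),
# following the usual green -> yellow -> red -> green cycle.
TRANSITION = {
    "red":    {"red": 0.97, "yellow": 0.00, "green": 0.03},
    "yellow": {"red": 0.10, "yellow": 0.90, "green": 0.00},
    "green":  {"red": 0.00, "yellow": 0.03, "green": 0.97},
}


def bayes_filter_step(belief, likelihood):
    """Predict with the transition model, then weight by detector likelihoods."""
    predicted = {s: sum(belief[p] * TRANSITION[p][s] for p in STATES) for s in STATES}
    posterior = {s: predicted[s] * likelihood[s] for s in STATES}
    total = sum(posterior.values())
    return {s: v / total for s, v in posterior.items()}


belief = {s: 1.0 / 3 for s in STATES}
red_frame = {"red": 0.7, "yellow": 0.2, "green": 0.1}
noisy_green_frame = {"red": 0.3, "yellow": 0.2, "green": 0.5}
for obs in (red_frame, red_frame, red_frame, noisy_green_frame):
    belief = bayes_filter_step(belief, obs)
print(belief)
```

After three frames of red evidence, a single noisy frame that favors green does not flip the filtered state, which is the kind of robustness temporal filtering is meant to add.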


Automatic laser calibration, mapping, and localization for autonomous vehicles

Jesse Levinson.

Thesis (Ph.D.), Stanford University, 2011.

This dissertation presents several related algorithms that enable important capabilities for self-driving vehicles. These include offline mapping and online map-based localization techniques using GPS, IMU, and lasers; online localization without a prerecorded map, as used in the DARPA Urban Challenge; intrinsic and extrinsic calibration algorithms for multi-beam lasers; and real-time detection of traffic lights.

pdf,

Stanford Library,

bib


Unsupervised Calibration for Multi-beam Lasers

Jesse Levinson and Sebastian Thrun.

International Symposium on Experimental Robotics (ISER), 2010.

This paper introduces an unsupervised method for determining the intrinsic and extrinsic calibration parameters of a multi-beam laser on a mobile robot in arbitrary, unknown environments. By defining and optimizing an objective function that rewards 3D consistency between points seen by different beams, we are able to calibrate internal angles, range offsets, and remittance response curves for each beam, in addition to the 6-DOF position of the laser relative to the vehicle's inertial frame.

pdf,

bib
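
A 2D toy version of the consistency objective, assuming a single unknown range offset on one beam and a second, already calibrated beam as reference: each corrected point is scored by its distance to the local surface (here a line) through its two nearest reference points. The full method handles many beams and parameters in 3D; these function names and values are illustrative only.

```python
import math


def point_to_line(p, a, b):
    """Distance from point p to the infinite line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    return abs(dy * (px - ax) - dx * (py - ay)) / math.hypot(dx, dy)


def to_xy(rng, angle):
    return (rng * math.cos(angle), rng * math.sin(angle))


def consistency_cost(ref_scan, scan, offset):
    """Sum of distances from `scan` points (range corrected by `offset`) to the
    local surface through the two nearest reference-beam points."""
    ref_pts = [to_xy(r, a) for r, a in ref_scan]
    cost = 0.0
    for r, a in scan:
        p = to_xy(r - offset, a)
        n1, n2 = sorted(ref_pts, key=lambda q: math.dist(p, q))[:2]
        cost += point_to_line(p, n1, n2)
    return cost


def calibrate_offset(ref_scan, scan, candidates):
    return min(candidates, key=lambda c: consistency_cost(ref_scan, scan, c))


# Both beams see a flat wall at x = 5; the second beam reads 0.2 m too long.
ref_scan = [(5.0 / math.cos(i * 0.1), i * 0.1) for i in range(-5, 6)]
scan = [(5.0 / math.cos(i * 0.1 + 0.05) + 0.2, i * 0.1 + 0.05) for i in range(-5, 5)]
candidates = [i * 0.05 for i in range(-10, 11)]
print(calibrate_offset(ref_scan, scan, candidates))
```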


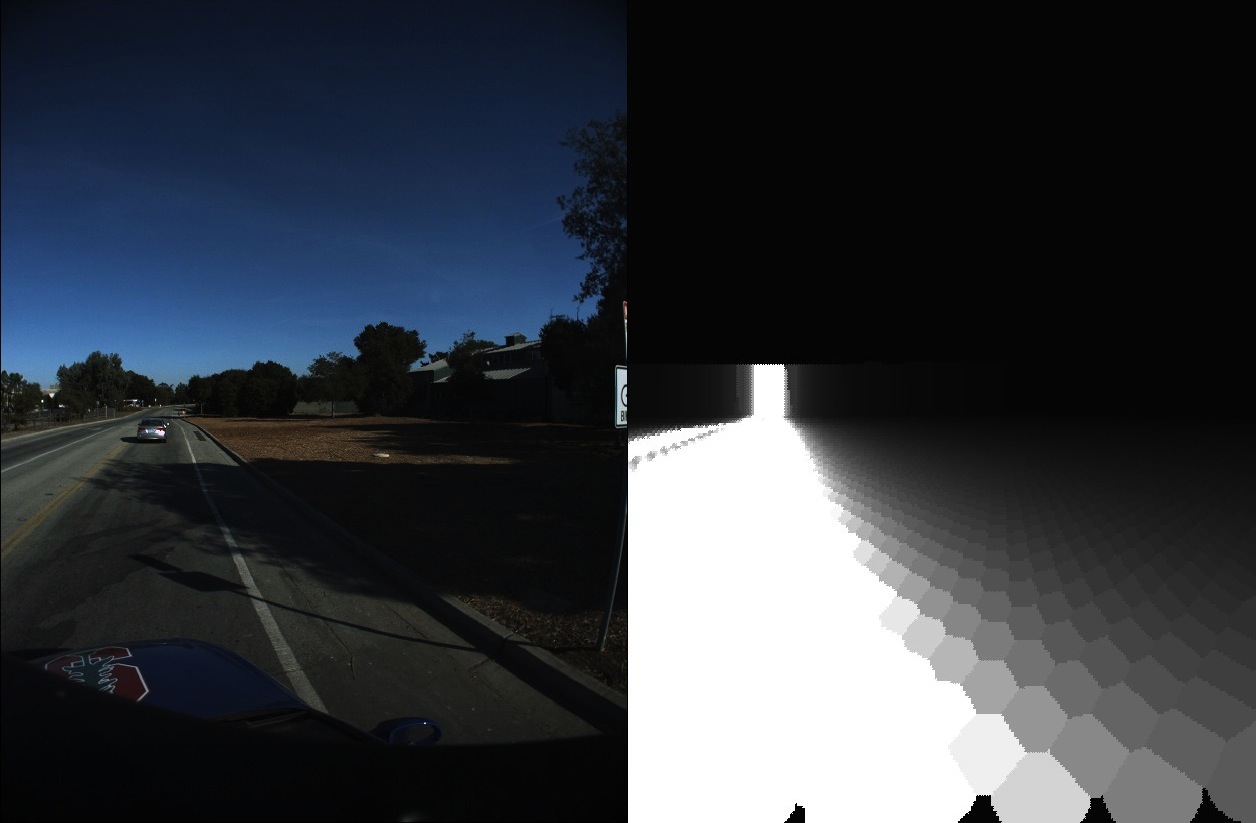

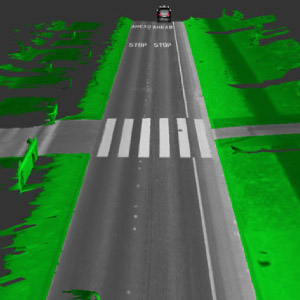

Robust Vehicle Localization in Urban Environments Using Probabilistic Maps

Jesse Levinson and Sebastian Thrun.

International Conference on Robotics and Automation (ICRA), 2010.

We extend previous work on localization using GPS, IMU, and LIDAR data by modeling the environment as a probabilistic grid in which every cell is represented as its own Gaussian distribution over remittance values. This approach offers higher precision, the ability to learn and improve maps over time, and increased robustness to environment changes and dynamic obstacles.

pdf,

bib
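
The per-cell Gaussian map can be sketched in 1D, assuming discrete cell indices and integer offsets in place of a full pose search. Each cell keeps a running mean and variance of remittance (Welford's method), and a scan is matched by choosing the offset with the highest total log-likelihood. The variance floor, the penalty for cells missing from the map, and all names are assumptions for illustration.

```python
import math


class GaussianCell:
    """Running mean and variance of remittance in one grid cell (Welford's method)."""

    def __init__(self):
        self.n, self.mean, self.m2 = 0, 0.0, 0.0

    def add(self, x):
        self.n += 1
        d = x - self.mean
        self.mean += d / self.n
        self.m2 += d * (x - self.mean)

    def variance(self, floor=1.0):
        # Floor the variance so rarely observed cells are not overconfident.
        return max(self.m2 / (self.n - 1), floor) if self.n > 1 else floor

    def log_likelihood(self, x):
        var = self.variance()
        return -0.5 * (x - self.mean) ** 2 / var - 0.5 * math.log(2 * math.pi * var)


def build_map(observations):
    """observations: iterable of (cell_index, remittance) pairs."""
    grid = {}
    for cell, value in observations:
        grid.setdefault(cell, GaussianCell()).add(value)
    return grid


def best_offset(grid, scan, offsets, unknown=-10.0):
    """Offset under which the scan's remittances are most likely given the map."""
    def score(dx):
        return sum(grid[c + dx].log_likelihood(v) if c + dx in grid else unknown
                   for c, v in scan)
    return max(offsets, key=score)


# Synthetic road: bright lane markings at cells 5, 6, and 12; each cell observed 3 times.
truth = [80.0 if i in (5, 6, 12) else 10.0 for i in range(20)]
grid = build_map((i, truth[i] + d) for i in range(20) for d in (0.0, 2.0, -2.0))
scan = [(c, truth[c + 3]) for c in range(10)]  # the vehicle's estimate is off by 3 cells
print(best_offset(grid, scan, range(-5, 6)))
```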


Exponential family sparse coding with application to self-taught learning

Honglak Lee, Rajat Raina, Alex Teichman, and Andrew Y. Ng.

International Joint Conference on Artificial Intelligence (IJCAI), 2009.

pdf,

bib


Map-Based Precision Vehicle Localization in Urban Environments

Jesse Levinson and Sebastian Thrun.

Robotics: Science and Systems (RSS), 2007.

GPS-based inertial guidance systems do not provide sufficient accuracy for many urban navigation applications, including autonomous navigation. We propose a technique for high-accuracy localization of moving vehicles that utilizes maps of urban environments. Our approach integrates GPS, IMU, wheel odometry, and LIDAR data to generate high-resolution environment maps. We use offline GraphSLAM techniques to align intersections and regions of self-overlap, and a particle filter to localize the vehicle relative to these maps in real time.

pdf,

bib
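
A 1D sketch of particle-filter localization against a map, assuming a synthetic remittance map, odometry-only motion, and a Gaussian measurement model; the noisy initial guess stands in for GPS. The paper's maps are built offline with GraphSLAM, which is not shown, and every parameter value and function name here is hypothetical.

```python
import math
import random


def remittance_at(world, x):
    """Map lookup: remittance of the 1 m cell containing position x (clamped to the map)."""
    i = min(max(math.floor(x), 0), len(world) - 1)
    return world[i]


def systematic_resample(particles, weights, rng):
    """Low-variance (systematic) resampling proportional to the weights."""
    n = len(particles)
    step = sum(weights) / n
    u = rng.uniform(0.0, step)
    out, i, cum = [], 0, weights[0]
    for _ in range(n):
        while u > cum and i < n - 1:
            i += 1
            cum += weights[i]
        out.append(particles[i])
        u += step
    return out


def particle_filter(world, controls, measurements, init_mean, init_std,
                    n=500, motion_std=0.1, meas_std=5.0, seed=0):
    rng = random.Random(seed)
    particles = [rng.gauss(init_mean, init_std) for _ in range(n)]
    for u, z in zip(controls, measurements):
        # Predict: apply odometry with noise.
        particles = [p + u + rng.gauss(0.0, motion_std) for p in particles]
        # Update: weight by how well the map explains the observed remittance.
        weights = [math.exp(-0.5 * ((z - remittance_at(world, p)) / meas_std) ** 2) + 1e-300
                   for p in particles]
        particles = systematic_resample(particles, weights, rng)
    return sum(particles) / n


sim = random.Random(42)
world = [sim.uniform(0.0, 100.0) for _ in range(100)]  # synthetic remittance map
true_x, controls, measurements = 20.5, [], []
for _ in range(30):
    true_x += 1.0
    controls.append(1.0)
    measurements.append(remittance_at(world, true_x) + sim.gauss(0.0, 2.0))
estimate = particle_filter(world, controls, measurements, init_mean=23.0, init_std=3.0)
print(true_x, estimate)
```

The initial guess is 2.5 m off, but matching the observed remittance sequence against the map pulls the particle cloud onto the true cell.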
